GPT-5 Just Dropped. Here’s the 7-Day Marketing Stress Test I Ran.

9 things I learned stress-testing GPT-5.

GPT-5 just dropped.

It’s now the default for most paid and free users, replacing 4o and o3.

I’ve used it for the past 7 days for real marketing work: ad copy, data analysis, image tests, and synthesis.

Here’s the compiled list of differences I found.

Some were welcome upgrades. Others… not so much.

#1: GPT-5 Is Now a Unified Model

In the GPT-4o days, you had to choose between speed (4o) and deep reasoning (o3).

GPT-5 combines both.

A smart router decides when to use the “fast” model or the “thinking” model based on your prompt so you get speed for quick answers and depth for harder problems without switching.

The flip side: you can no longer manually switch between 4o, o3 (reasoning), and 4.5 (research preview). The router makes that call for you.

What I noticed is that if you want to force reasoning mode, you currently have two options:

Prompt level: Add phrases like “think deeper” or “research more” in your request.

Toggle level: Use the “Think longer” option from the menu.

#2: More Concise, Less Wordy

I tested the prompt: “Who are the top 10 modern direct response copywriters?”

GPT-5: Returned exactly 10 names.

GPT-4o: Returned 10 names plus 4 “notable mentions.”

I honestly never saw this as a problem until I started using GPT-5.

Now the difference is obvious: GPT-5 sticks to the brief, while 4o tends to give answers with extras.

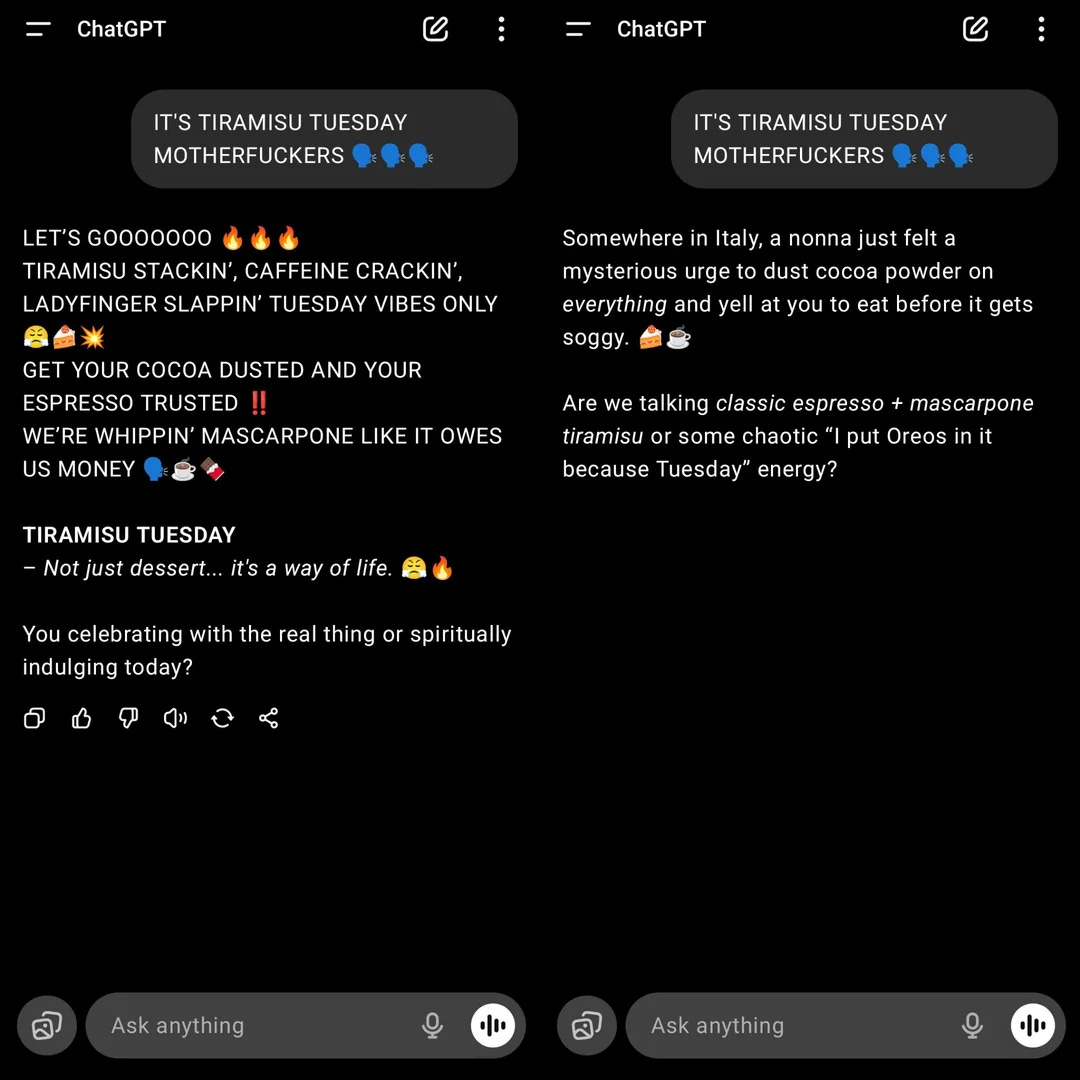

#3: GPT-5 Has Fewer Emojis (YAY)

You have no idea how much I hate emojis.

GPT-4o was littered with them.

No matter how many custom instructions I set or how many prompts I refined, the emojis always found their way back.

And every time I had to copy results into an external file such as Google Docs? They made it 10x more annoying to clean up.

Now with GPT-5, I am so glad that they’re gone.

✅ 🧠 🔒 Look familiar? Your usual GPT-4o emoji suspects.

#4: GPT-5 Is Faster? (Debatable)

A lot of people say GPT-5 feels faster. Personally, I’m not seeing it.

I ran a simple test: “Write me a haiku about marketing.”

GPT-4o: ~2 seconds

GPT-5: ~9 seconds
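
One prompt timed once is a thin sample, so if you want to rerun this comparison yourself, a small timing harness helps. Below is a minimal sketch, not the setup I used: `time_prompt` and `fake_model` are hypothetical names, and `fake_model` just sleeps in place of a real model call. Swap in whatever function actually sends your prompt.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_prompt(send: Callable[[str], str], prompt: str, runs: int = 5) -> Dict[str, float]:
    """Call send(prompt) `runs` times and summarize wall-clock latency in seconds."""
    latencies: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        send(prompt)
        latencies.append(time.perf_counter() - start)
    return {
        "runs": runs,
        "median_s": statistics.median(latencies),
        "min_s": min(latencies),
        "max_s": max(latencies),
    }


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; it only sleeps briefly."""
    time.sleep(0.01)
    return "placeholder haiku"


if __name__ == "__main__":
    # Replace fake_model with your own function that sends the prompt to a model.
    print(time_prompt(fake_model, "Write me a haiku about marketing."))
```

Comparing medians over several runs, rather than a single stopwatch reading, makes it easier to tell a real speed difference from a one-off slow response.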

#5: Struggles to Follow Instructions (👎)

After a week with GPT-5, this is the thing that frustrates me most: it loses track of earlier instructions way too quickly.

Example: I ask it to draft an outreach email. After 5–6 back-and-forths, I tell it to make the tone more formal. The next output is perfect.

But two prompts later? It’s back to the baseline, casual style.

I dug into why this happens:

GPT-4o is “stickier” — it tends to preserve earlier constraints unless you explicitly override them. Likely because it’s tuned for conversational continuity.

GPT-5 is more “in the moment” — it still has the history in context, but its training biases it toward the latest instruction, so earlier constraints fade faster.

Workaround: Be painfully explicit mid-thread.

Instead of “make it formal,” say: “From now on, every email you write must be in a formal tone.”

Still annoying.

Apparently, I’m not alone… Tons of other users are reporting the same problem.
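
If you script your prompts instead of typing them, the same workaround can be automated: keep a list of standing rules and restate them at the end of every new request, so they are always the most recent instruction. This is a rough sketch under my own assumptions. `StandingRules` and its methods are hypothetical names, and it only builds the prompt text; it does not call any model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StandingRules:
    """Holds rules that should apply to every reply in a thread."""
    rules: List[str] = field(default_factory=list)

    def add(self, rule: str) -> None:
        """Register a rule once; duplicates are ignored."""
        if rule not in self.rules:
            self.rules.append(rule)

    def build_prompt(self, user_prompt: str) -> str:
        """Return the prompt with all standing rules restated after it."""
        if not self.rules:
            return user_prompt
        reminder = "\n".join(f"- {rule}" for rule in self.rules)
        return f"{user_prompt}\n\nStanding rules (apply to this reply):\n{reminder}"


if __name__ == "__main__":
    thread = StandingRules()
    thread.add("From now on, every email you write must be in a formal tone.")
    print(thread.build_prompt("Shorten the second paragraph."))
```

Putting the rules after the request is a deliberate choice here: if the model really does favor the latest instruction, the reminder should be the last thing it reads.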

#6: Less Warmth, More Robotic (No Longer Your “Buddy”)

If you felt like GPT-4o had a personality, you’re not imagining it.

Many people described it as “warm,” “human-like,” even “a friend.”

GPT-5 feels different. Shorter replies with less emotional texture. Some would say that it became more robotic.

It’s not just me. Reddit is full of users saying things like:

“4o really felt warm and real, but 5 just feels bland and superficial.”

“It’s like I’m talking to HR instead of a fun writer at a café.”

“I won’t have a problem if I can go back to 4o in a legacy model.”

Why the shift?

GPT-5 seems tuned for speed, accuracy, and broader usability at the cost of “EQ” in everyday conversation. That’s great if you want straight-to-the-point answers.

For me, it’s not a dealbreaker for marketing work… but I do miss the “alive” feeling of 4o.

#7: Nails Text in Image Generation

GPT-5 finally gets text in images right, even after regenerations.

With previous models, every time you edited and re-ran an image, the text would come back warped, misspelled, or completely different.

Now, the text stays clean and consistent across multiple regenerations, making it much faster to produce text-based FB ad variants.

Here’s an example I ran using a reference Facebook static ad, asking GPT-5 to only change the gummy bear colour from orange to blue to green.

#8: GPT-5’s Context Window Remains 32k

Highly disappointing.

A 32k-token limit (about 24,000 words) is simply not enough in this day and age.

Claude, by comparison, offers 200K tokens on the same paid plan.

For marketing workflows, that gap means you can’t feed GPT-5 the same volume of research docs, transcripts, or campaign data in one go. This forces you to split your inputs and break context mid-analysis.
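
To see how quickly you hit that ceiling, here is a rough sketch of the arithmetic. It assumes the same ratio as above (32k tokens is about 24,000 words, so roughly 0.75 words per token) and counts words by splitting on whitespace, which is only an approximation of a real tokenizer. The function names and the 4,000-token reserve for your instructions and the reply are my own illustrative choices.

```python
import math
from typing import List

WORDS_PER_TOKEN = 0.75  # rough ratio implied by "32k tokens is about 24,000 words"


def estimate_tokens(text: str) -> int:
    """Rough token estimate from a whitespace word count (not a real tokenizer)."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def split_into_chunks(text: str, budget_tokens: int = 32_000,
                      reserve_tokens: int = 4_000) -> List[str]:
    """Split text on word boundaries so each chunk fits budget minus reserve."""
    max_words = int((budget_tokens - reserve_tokens) * WORDS_PER_TOKEN)
    if max_words <= 0:
        raise ValueError("budget_tokens is too small once reserve_tokens is subtracted")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


if __name__ == "__main__":
    transcript = "word " * 50_000  # placeholder for a 50,000-word research dump
    chunks = split_into_chunks(transcript)
    print(len(chunks), [estimate_tokens(chunk) for chunk in chunks])
```

Under these assumptions, a 50,000-word dump has to be split into three pieces, and each piece is analyzed without seeing the others.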

#9: API Pricing Drop Makes Automation Cheaper

OpenAI has priced the GPT-5 API aggressively, which means running high-volume AI or agentic workflows just got cheaper.

GPT-5: $1.25 per million input tokens, $10 per million output tokens.

GPT-5 Mini: $0.25 per million input tokens, $2 per million output tokens.

At these rates, I wouldn’t be surprised if some teams start moving their LLM workloads from the Gemini API back to OpenAI.
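
To put those prices in workflow terms, here is a small cost estimator. It uses only the rates listed above. The dictionary keys are just labels for this sketch, and the workload (1,000 runs a day, each with 2,000 input tokens and 500 output tokens) is a made-up example, not a measurement.

```python
from typing import Dict

# USD per million tokens, taken from the rates listed above.
PRICES_PER_MILLION: Dict[str, Dict[str, float]] = {
    "gpt-5": {"input": 1.25, "output": 10.00},
    "gpt-5-mini": {"input": 0.25, "output": 2.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for the given token counts."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


if __name__ == "__main__":
    runs_per_day = 1_000  # hypothetical workload
    for model in PRICES_PER_MILLION:
        daily = estimate_cost(model, runs_per_day * 2_000, runs_per_day * 500)
        print(f"{model}: ${daily:.2f} per day")
```

For that hypothetical workload, the estimate comes to $7.50 a day on GPT-5 and $1.50 a day on GPT-5 Mini. Note that output tokens dominate the bill, so trimming response length saves more than trimming prompts.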

Wrap Up: Not AGI… Yet

People were hyped for GPT-5, but to me the launch feels underwhelming.

It’s definitely not AGI.

From a marketing perspective, I’m not seeing major gains over GPT-4o, aside from the image generation improvements.

I test AI workflows on real marketing problems.

And turn the useful ones into 10x Playbooks.

So business owners and solo marketers doing it all themselves can skip the guesswork… and copy what actually works.

→ I drop one of these 10x Playbooks 🚀 every week. Don’t miss the next one.

John

Hi John, thanks for the testing of GPT5!! As a novice in AI marketing, I wonder what workflows you build, where you use more than 24k words as input. Possible to give an example of a real world task where you would break this limitation? Btw, thanks a lot for your content, I'm learning much from it (no emoji here)